CPIQ

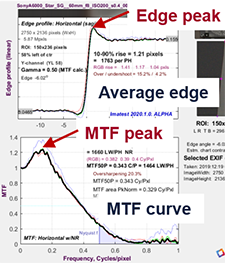

Correcting Misleading Image Quality Measurements

We discuss several common image quality measurements that are often misinterpreted, causing bad images to be rated as good, and we describe how to obtain valid measurements.

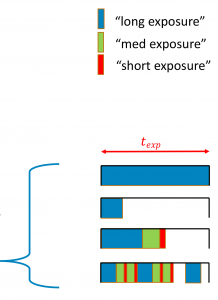

Describing and Sampling the LED Flicker Signal

High-frequency flickering light sources such as pulse-width modulated LEDs can cause image sensors to record incorrect levels. We describe a […]

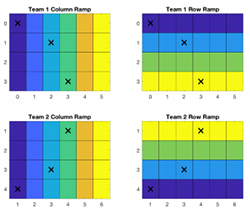

Validation Methods for Geometric Camera Calibration

Camera-based advanced driver-assistance systems (ADAS) require the mapping from image coordinates into world coordinates to be known. The process of computing […]

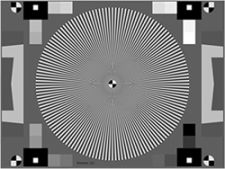

Measuring camera Shannon information capacity with a Siemens star image

Shannon information capacity, which can be expressed as bits per pixel or megabits per image, is an excellent figure of […]

Verification of Long-Range MTF Testing Through Intermediary Optics

Measuring the MTF of an imaging system at its operational working distance is useful for understanding the system’s use case […]

Reducing the cross-lab variation of image quality metrics

As imaging test labs seek to obtain objective performance scores of camera systems, many factors can skew the results.

Automating CPIQ analysis Using Imatest IT and Python

The Python interface to Imatest IT provides a simple means of invoking Imatest’s tests. This post will show how Imatest […]