Deprecated in Current Release

Points over the entire image field

Fitting an accurate distortion function requires data points spread over the entire image. Typically, this means that each camera needs at least one image in which the calibration target fills the field of view.
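One way to check this before running a calibration is to divide the image into a grid and count how many cells contain at least one detected target point. The sketch below assumes detections are available as `(x, y)` pixel coordinates; `field_coverage` and its 8×6 default grid are hypothetical illustrations, not part of the calibration software.

```python
from typing import Iterable, Tuple

# Hypothetical type: the (x, y) pixel position of one detected target point.
Point = Tuple[float, float]


def field_coverage(captures: Iterable[Iterable[Point]], width: int, height: int,
                   nx: int = 8, ny: int = 6) -> float:
    """Hypothetical helper: fraction of an nx-by-ny grid of image cells that
    contain at least one detected target point, pooled over the given captures."""
    covered = set()
    for points in captures:
        for x, y in points:
            if 0 <= x < width and 0 <= y < height:
                # Map the pixel position to the grid cell that contains it.
                covered.add((int(x * nx / width), int(y * ny / height)))
    return len(covered) / (nx * ny)


# Example: one capture with a point in every cell, one with a single central point.
width, height = 640, 480
full = [((i + 0.5) * 80, (j + 0.5) * 80) for i in range(8) for j in range(6)]
print(field_coverage([full], width, height))              # 1.0
print(field_coverage([[(320.0, 240.0)]], width, height))  # 1/48 of the cells
```

Because the requirement asks for a single image that fills the field of view, pass a one-element list to score one capture on its own rather than pooling all of them.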

Note that for some devices you may not need to (or be able to) fill the entire image with the target. This is typically the case with fisheye lenses, which project a limited image circle onto the image sensor plane.

The principal requirement remains the same, however: make sure that at least some target points are detected as far out along the image circle radius as your application will ultimately require.

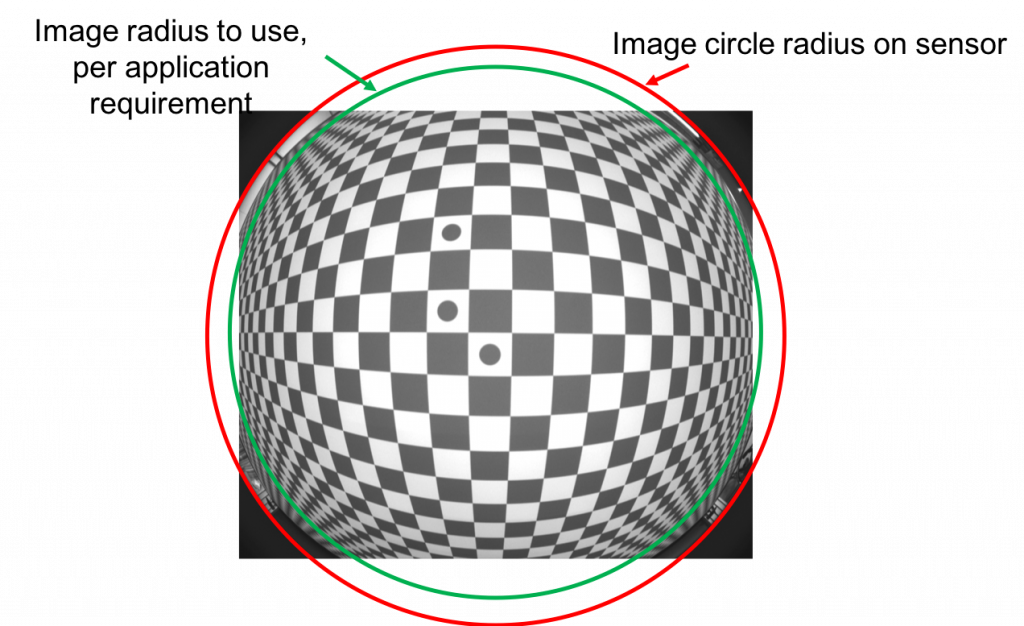

Devices tagged as fisheye can also define an image circle radius value, as seen in Figure 1, which indicates how far from the image array center the calibration requires target points. This radius should typically lie inside the radius at which the lens falls off completely, but how far inside depends on the specific application.

Figure 1: Meeting the requirement of filling the maximum required image circle (green) with target points in at least one capture. In this sample, there is no image data outside the red circle due to the extreme lensing.
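The image circle requirement can be checked in a similar spirit by measuring how far from the image array center the detected points reach. The sketch below is a simplified, hypothetical check (`max_radius` and `meets_image_circle` are illustrative names) that tests only radial reach, not whether the points are spread around the whole circle; it assumes the image array center and the required radius are given in pixels.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # hypothetical: (x, y) pixel position of a detected point


def max_radius(points: Sequence[Point], center: Point) -> float:
    """Largest distance of any detected point from the image array center."""
    cx, cy = center
    return max((math.hypot(x - cx, y - cy) for x, y in points), default=0.0)


def meets_image_circle(captures: Sequence[Sequence[Point]], center: Point,
                       required_radius: float) -> bool:
    """Hypothetical check: does at least one capture reach the required radius?

    Only radial reach is tested; angular coverage of the circle is not.
    """
    return any(max_radius(points, center) >= required_radius for points in captures)


center = (640.0, 480.0)   # assumed image array center, in pixels
near = [(650.0, 490.0)]   # close to the center
wide = [(940.0, 900.0)]   # roughly 516 px from the center
print(meets_image_circle([near, wide], center, 500.0))  # True
print(meets_image_circle([near], center, 500.0))        # False
```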

Shared points across devices

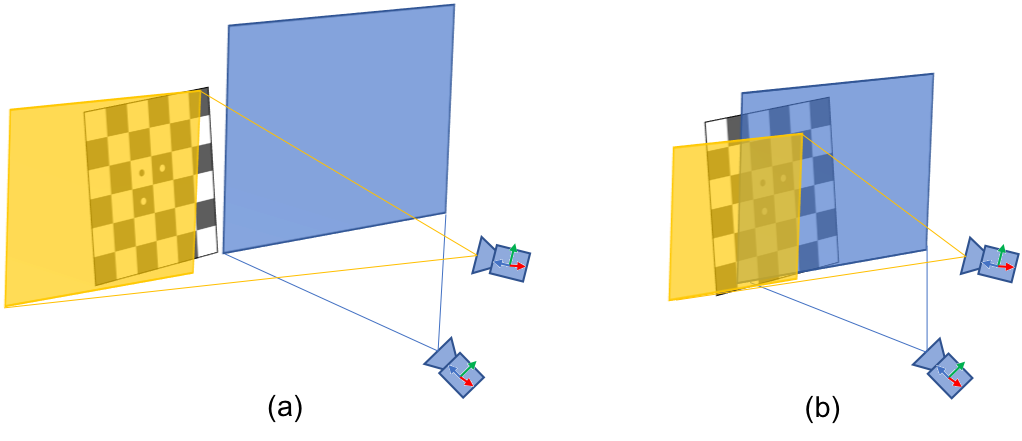

Systems with multiple cameras require that at least some capture positions contain target points that are visible to multiple cameras at once, as seen in Figure 2. These shared points are needed to establish the extrinsic parameters (relative positions and orientations) between the cameras.

Figure 2: Overlapping fields of view.

(a) In this test position, the two views share no target points and thus provide no evidence for extrinsic calibration. (This position may still provide evidence for calibrating one camera.)

(b) A closer test position puts the target in an area of overlapped fields of view. Both cameras see the target center and an overlapping set of points, so these images can be used for extrinsic calibration.

The cameras must have some overlapping fields of view to meet this requirement, though not every camera has to overlap with every other camera. Each camera must have at least one test position where it sees some of the same target points as another camera.
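This requirement can be expressed as a graph in which cameras are nodes and two cameras are linked if, at some position, they detect at least one of the same target points. The sketch below assumes detections are recorded as `observations[position][camera] = set of target point IDs`, a hypothetical layout. It reports cameras with no links, which violate the requirement above, and also whether all cameras form one connected group. That second check is an added assumption, on the reasoning that extrinsics can only be chained between cameras joined by some path of shared points.

```python
from itertools import combinations
from typing import Dict, Hashable, Set, Tuple

# Hypothetical layout: observations[position][camera] = IDs of detected target points.
Observations = Dict[Hashable, Dict[Hashable, Set[Hashable]]]


def overlap_links(obs: Observations) -> Dict[Hashable, Set[Hashable]]:
    """Map each camera to the cameras it shares at least one target point with."""
    links: Dict[Hashable, Set[Hashable]] = {}
    for cams in obs.values():
        for cam in cams:
            links.setdefault(cam, set())  # register cameras even if they have no links
        for a, b in combinations(cams, 2):
            if cams[a] & cams[b]:  # both cameras saw the same point IDs here
                links[a].add(b)
                links[b].add(a)
    return links


def check_overlap(obs: Observations) -> Tuple[Set[Hashable], bool]:
    """Return (cameras sharing no points with any other camera, all cameras linked?)."""
    links = overlap_links(obs)
    isolated = {cam for cam, nbrs in links.items() if not nbrs}
    if not links:
        return isolated, True
    # Depth-first traversal of the overlap graph from an arbitrary camera.
    start = next(iter(links))
    seen, stack = {start}, [start]
    while stack:
        for nbr in links[stack.pop()]:
            if nbr not in seen:
                seen.add(nbr)
                stack.append(nbr)
    return isolated, len(seen) == len(links)


obs = {
    "p1": {"A": {1, 2}, "B": {2, 3}},   # A and B share point 2
    "p2": {"C": {7}, "D": {7, 8}},      # C and D share point 7
}
print(check_overlap(obs))               # (set(), False): two separate groups
obs["p3"] = {"B": {5}, "C": {5, 6}}     # B and C share point 5
print(check_overlap(obs))               # (set(), True)
```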

It is recommended that some positions place target points in view of as many devices simultaneously as possible.
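Assuming the same hypothetical `observations[position][camera]` layout, a short helper can score each position by the largest number of cameras that detect one and the same target point, which makes positions serving this recommendation easy to spot. The name `max_shared_views` is illustrative only.

```python
from collections import Counter
from typing import Dict, Hashable, Set


def max_shared_views(obs: Dict[Hashable, Dict[Hashable, Set[Hashable]]]) -> Dict[Hashable, int]:
    """Per position: the largest number of cameras that detect one same target point."""
    scores = {}
    for position, cams in obs.items():
        # Count how many cameras detected each target point ID at this position.
        counts = Counter(pid for pids in cams.values() for pid in pids)
        scores[position] = max(counts.values(), default=0)
    return scores


obs = {
    "p1": {"A": {1, 2}, "B": {2}, "C": {2, 3}},  # point 2 is seen by all three cameras
    "p2": {"A": {1}, "B": {4}},                  # no point is shared
}
print(max_shared_views(obs))  # {'p1': 3, 'p2': 1}
```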

Redundancy

The previous requirements describe the necessary evidence for producing a valid calibration of a system.

However, the minimal set of images and capture positions may not be sufficient to produce calibration results of the desired accuracy. Depending on how strict your requirements are, you may need to capture more images at a greater variety of positions to gather additional evidence.

This additional evidence helps condition the calibration estimation problem (see Calibration Procedure).
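To illustrate why spread-out and redundant evidence matters, the sketch below fits a deliberately simple radial model, `r_d = r * (1 + k1*r**2 + k2*r**4)`, with radii normalized so that `r = 1` at the edge of the required field. It computes the least-squares variance of the predicted distortion at the edge, assuming independent noise of equal variance on every point. The model and the function name are assumptions for illustration; they are not necessarily what the calibration software fits.

```python
from typing import List, Sequence


def edge_prediction_variance(radii: Sequence[float], r_eval: float = 1.0) -> float:
    """Variance (in units of the noise variance) of the least-squares prediction of
    the distortion k1*r**3 + k2*r**5 at r_eval, for the hypothetical two-term model."""
    # Jacobian columns w.r.t. (k1, k2) are r**3 and r**5, so the
    # normal matrix J^T J is [[a, b], [b, c]].
    a = sum(r ** 6 for r in radii)
    b = sum(r ** 8 for r in radii)
    c = sum(r ** 10 for r in radii)
    det = a * c - b * b
    if det <= 0:
        return float("inf")  # too little evidence: k1 and k2 are not separately determined
    # Prediction gradient g = (r**3, r**5); variance = g^T (J^T J)^-1 g.
    g1, g2 = r_eval ** 3, r_eval ** 5
    return (c * g1 * g1 - 2 * b * g1 * g2 + a * g2 * g2) / det


def spread(n: int, s: float) -> List[float]:
    """n radii evenly spread over (0, s), where r = 1 is the edge of the field."""
    return [s * (i + 0.5) / n for i in range(n)]


center_only = edge_prediction_variance(spread(50, 0.3))
full_field = edge_prediction_variance(spread(50, 1.0))
doubled = edge_prediction_variance(spread(50, 1.0) * 2)  # same radii, captured twice
print(center_only / full_field)  # very large: extrapolating to the edge is poorly constrained
print(doubled / full_field)      # about 0.5
```

In this sketch, evidence confined to `r < 0.3` leaves the edge prediction orders of magnitude more uncertain than evidence spread out to the edge, while capturing the same radii twice only halves the variance: a greater variety of positions helps far more than repetition alone.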